Windows CUDA Inference


CUDA inference requires a CUDA runtime environment. To set it up, install the CUDA driver and the TensorRT support library, then obtain the model files.

Install the CUDA Driver For Windows

The CUDA driver package includes its own GPU driver, so there is no need to install a GPU driver separately beforehand. The CUDA version currently used is CUDA_11.8.0_522.06_windows.

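You can download it from https://developer.nvidia.com/cuda-downloads (this page offers the latest release; CUDA 11.8.0 is listed in the archive of previous CUDA releases).

Alternatively, you can download the installer directly from the following link: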

After downloading, simply install it using the default configuration.
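To confirm the driver after installation, the sketch below reads the driver version from `nvidia-smi` (installed alongside the NVIDIA driver) and compares it with 522.06, the driver version in the CUDA_11.8.0_522.06_windows package name. It assumes that any newer driver is also acceptable, which matches CUDA's general driver backward compatibility but is not stated in this guide. The helper names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Tuple

# Driver version bundled with CUDA_11.8.0_522.06_windows.
# Assumption: newer drivers are also acceptable.
MIN_DRIVER = (522, 6)


def parse_driver_version(text: str) -> Tuple[int, int]:
    """Parse a driver version such as '522.06' into (major, minor)."""
    match = re.search(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no driver version found in {text!r}")
    return int(match.group(1)), int(match.group(2))


def query_driver_version() -> str:
    """Ask nvidia-smi for the installed driver version string."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        raise FileNotFoundError("nvidia-smi not found on PATH; is the driver installed?")
    result = subprocess.run(
        [exe, "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    # nvidia-smi prints one line per GPU; all GPUs share the same driver.
    return result.stdout.strip().splitlines()[0]


def driver_is_new_enough(version_text: str, minimum: Tuple[int, int] = MIN_DRIVER) -> bool:
    """Compare (major, minor) tuples against the assumed minimum."""
    return parse_driver_version(version_text) >= minimum


def report() -> None:
    """Print whether the installed driver meets the assumed minimum."""
    version = query_driver_version()
    status = "OK" if driver_is_new_enough(version) else "older than 522.06"
    print(f"NVIDIA driver {version}: {status}")
```

Run `report()` on the inference machine after installation; it raises an error if `nvidia-smi` cannot be found.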

Install the Windows TensorRT Support Library

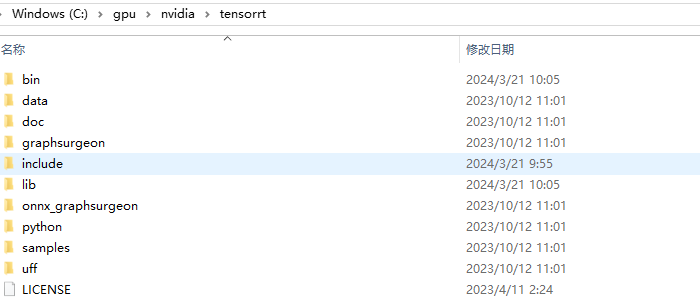

Contact technical support to obtain the tensorrt.zip package, and extract the package to C:\gpu\nvidia\tensorrt. The final directory structure is as follows:
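If you prefer to script the extraction, the following sketch unpacks tensorrt.zip into C:\gpu\nvidia\tensorrt with Python's standard `zipfile` module and returns the archive's top-level entries. The internal layout of tensorrt.zip is not described in this guide, so compare the result with the expected directory structure: if the archive wraps everything in a single top-level folder, you may end up with a nested folder that needs to be moved up one level. The function name is hypothetical.

```python
import zipfile
from pathlib import Path

# Target directory stated in this guide.
TENSORRT_DIR = Path(r"C:\gpu\nvidia\tensorrt")


def extract_tensorrt(zip_path, target=TENSORRT_DIR):
    """Extract tensorrt.zip into target and return its top-level entry names."""
    target = Path(target)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
        names = archive.namelist()
    # Zip entry names use '/' separators; keep only the first path component.
    return sorted({Path(name).parts[0] for name in names})
```

For example, `extract_tensorrt(r"C:\Downloads\tensorrt.zip")` (a hypothetical download location) extracts the package and lists what now sits directly under C:\gpu\nvidia\tensorrt.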

Model File Installation

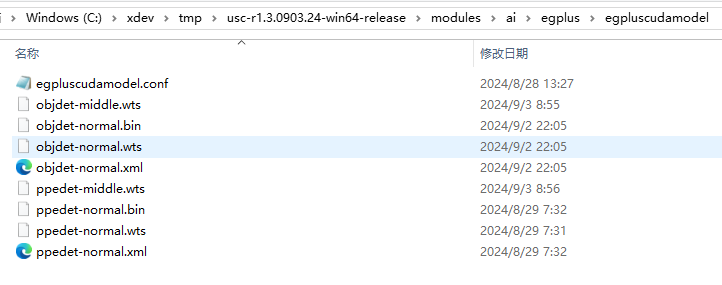

Contact technical support to obtain the model file package egplus.zip, and place the files in egplus\egpluscudamodel into the modules\ai\egplus\egpluscudamodel directory. The final directory structure is as follows:
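The copy step can also be scripted. In this sketch, `package_root` is the folder where egplus.zip was extracted and `install_root` is the directory that contains `modules`; both are hypothetical parameters, since this guide does not state where the installation lives. `shutil.copytree(..., dirs_exist_ok=True)` requires Python 3.8 or later and overwrites files that already exist in the target.

```python
import shutil
from pathlib import Path


def install_model_files(package_root, install_root):
    """Copy egplus/egpluscudamodel into modules/ai/egplus/egpluscudamodel.

    package_root and install_root are hypothetical: the folder holding the
    extracted egplus.zip and the folder that contains 'modules'.
    Returns the copied file paths relative to the target directory.
    """
    src = Path(package_root) / "egplus" / "egpluscudamodel"
    dst = Path(install_root) / "modules" / "ai" / "egplus" / "egpluscudamodel"
    if not src.is_dir():
        raise FileNotFoundError(f"expected model folder not found: {src}")
    # Creates missing parent directories and merges into an existing target.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    return sorted(p.relative_to(dst).as_posix() for p in dst.rglob("*") if p.is_file())
```

The returned list makes it easy to check that the model files landed in the expected directory before restarting the service.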

After the installation is complete, restart the USC service and navigate to Analysis > Settings > Inference Service Configuration. You should see the CUDA driver version and the CUDA runtime version, as shown in the figure below:

During the first startup, the system generates a model optimized for the installed GPU; this takes about 5 minutes. A prompt indicating this appears under Analyze > Settings > Inference Service Status, as shown in the following figure: